This might seem a little off-topic, but the web browser could be considered one of your primary development tools. Just think how much time you actually spend in a browser: researching, reading, testing, debugging, or simply wasting time! After playing with a few Chrome Extensions, I realized just how much more efficient I could be. I liked the way Chrome integrates information and makes it useful, which is essentially Google’s mission.

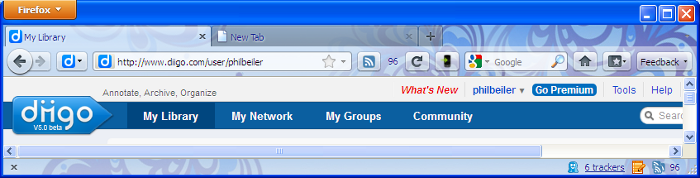

I had been a loyal Firefox user for many years. Firefox provided a nice cross-platform experience between Windows and Linux, and was highly extensible. This extensibility, the ability to add new behavior to the browser through “Add-ons”, was one of Firefox’s early benefits. Amazingly, I was never a big “Add-on” user; I used a few of them, but never took advantage of them or even explored what they could do for me. I used the Delicious Add-on for my bookmarking needs, but recently moved to the Diigo Add-on. I also use Firefox Sync for browser synchronization, including my tabs, bookmarks, and history. For posting to my blog, I’m a huge fan of the ScribeFire Add-on. If you happen to do web page development, you have to try the Firebug Add-on. That is basically the extent of my Add-on usage; I did not ask too much from my browser!

Before jumping ship, I upgraded all of my machines to the Firefox 4 Beta. There were numerous technical improvements, but I was primarily focused on pure usability and how the browser could help me be more efficient. Start-up time was one of my biggest Firefox complaints; the browser seemed to have a tendency to bog down over time. The new version seems to have gone through a pretty dramatic user interface overhaul and addressed multiple performance issues, including start-up.

I was pretty happy with the UI changes, preferring the new, but controversial, tab location. The tabs are now located on top of the navigation toolbar; there was apparently quite a bit of debate over this little change! I prefer having two control rows at the top of the browser window: one row for tabs and another row for navigation, apps, and widgets. I have seen a lot of content about these “web apps”, but it seems a little like pure marketing to me! The Firefox implementation, App Tabs, appears to be little more than a space-saving shortcut; however, I can see it providing value for heavily used websites.

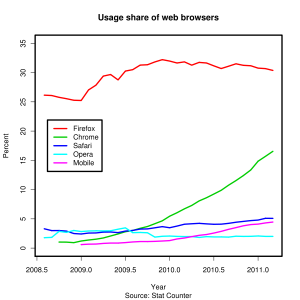

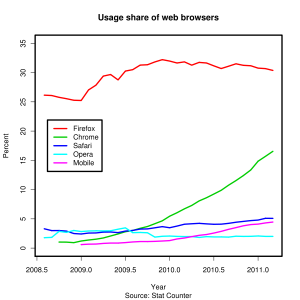

I had installed Chrome a couple of years ago, but was not too excited by it; I saw no compelling reason to change browsers. Wikipedia has an interesting graph of web browser usage; I was really amazed to see how Chrome’s market share has taken off in the last twelve months. Even on my own blog, Chrome accounts for almost 25% of the traffic. I installed the newest version of Chrome last week and was immediately hooked. Unfortunately, I have become a true Google convert. It started with the purchase of my Android phone, and there was no looking back. I am not saying that the following activities can or cannot be done in Firefox; I’m simply saying that I like everything better in Chrome!

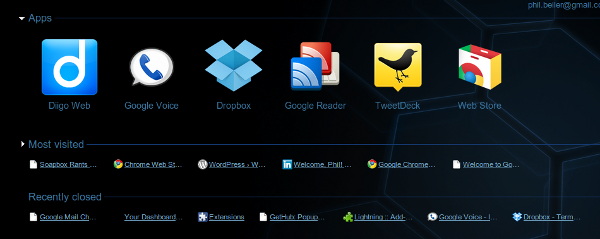

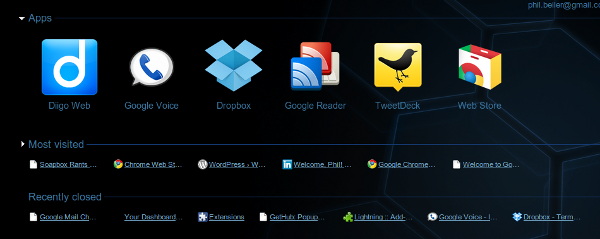

A simple, but extremely cool, feature is the “New Tab” behavior. It obviously opens a new tab, but its contents are quite different from what you would expect. It is basically divided into three sections: Apps, Most visited, and Recently closed. Under the Apps section, you will see the Web Store icon; does everyone need to have their own app store these days? Anyway, the Web Store is a very well-done site that makes searching for and installing new behavior, either applications or extensions, extremely easy.

Applications seem like fancy bookmarks, but from my reading, they can be (and are) a lot more sophisticated. I looked at the SlideRocket app; it was genuinely cool… however, you can also run the app in Firefox! The app concept seems analogous to a rich user interface experience, one that performs like a real desktop application rather than a collection of old-fashioned HTML pages.

My favorite feature of Chrome has to be the Extensions. Extensions add behavior to the browser itself. You can see from the picture to the right that I have added quite a few of them! They integrate into the Navigation Bar and look very nice, consuming minimal space while providing significant functionality. They look similar to the icons found on cell phones; many of the extensions have little indicators that track the number of items you need to address. You can find extensions for all of the standard Google tools (Gmail, Reader, and Calendar), and even for eBay. The Calendar extension is extremely helpful; it tells you how long until your next appointment, and when you mouse over it, it shows you the event details. WP Stats is another personal favorite; it tells me how many people have looked at my site! Clicking on some icons will navigate you to the corresponding website, much like a shortcut. Other icons have specific behavior, such as showing you detailed website access statistics or an enhanced view of your search history. My favorite blogging tool, ScribeFire, is also available in Chrome, but the spell checker is not working! I like the placement and interaction of the Chrome extensions much better than the traditional Firefox “Add-on” view, which is typically at the bottom of the browser window; Chrome makes the extensions feel more integrated with the browser and part of the actual user experience.

My final Chrome praise is the synchronization with my Google account. It is pretty cool to watch an extension get automatically installed on my Windows machine, simply by installing it on my Linux machine. No restart or refresh is required; it just automatically shows up! I did notice one small oddity: I still had to configure the extension on the Windows machine. This seems rather strange; maybe it is a bug… I assumed that Chrome would save each extension’s settings and synchronize them too. Even with this little shortcoming, there is no going back to Firefox for me. I hope you give it a try too!

I recently attended a couple of webinars that focused on the relationship between social media and recruiting. It was very interesting how much emphasis was put on your Internet Presence. LinkedIn was the obvious leader in professional networking. The speaker actually said your LinkedIn profile is 10 times more important than your resume. I’m not sure I completely believe that point, at least not until I can apply for a job opportunity with my LinkedIn profile URL!!! However, there must be some truth to the statement; they also said fewer than 10% of jobs are filled by randomly submitted resumes via job websites. Most jobs are obtained through your professional network, almost eliminating the need for an actual paper resume. With so much information about you available on the Internet, it is just easier to Google someone. If a prospective hiring manager Googles your name, what exactly will they find? An interesting question… Are you completely anonymous, with no Internet presence at all? Or do you have a LinkedIn profile that is essentially empty, with a profile picture of Rover, your favorite pet? What exactly does that say about you, professionally? Internet Presence was the exact reason I started this blog. In the back of my mind, I thought that blogging would be a great personal communication tool. What better way to share my thoughts, ideas, and lessons learned? It hopefully communicates what I believe is important, from a typically professional perspective.

On to PingTags, a new service that I believe started earlier this year. It is pretty simple: generate a QR code and link it to your LinkedIn profile. Give it a try; scan the code to the left! After spending way too much time on my resume and LinkedIn profile, I was rather intrigued by this idea. I have seen QR codes in magazines, but was never excited by them. They are obviously bound to a paper world, and I’m not really much of a paper person. At least 95% of my reading is done on my phone or Kindle, which essentially eliminates the need for scanning QR codes; I just click the link! So, what is the point of PingTags? What about your business card? That was another interesting suggestion from the webinar; they recommended that everyone have a personal business card, something you can easily share with the people you meet. I honestly have not had a business card in over 15 years. A personal business card is something that I never really thought about, but it does seem like a good idea, and that is exactly what PingTags wants you to do… put the QR code on the back of your business card. I actually thought that was kind of cool, in a geeky way! Flip over the card, scan it, and navigate to a nicely formatted mobile version of your profile. I’m not sure where I will go with this, but it is good information to know!

I’m not exactly sure how

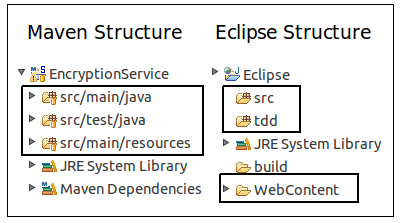

I never liked the required Maven directory structure; I must have subconsciously adopted the Eclipse project structure as my personal standard. Converting the project’s directory structure was trivial, and I soon realized it was not that big a deal after all; I always separate my source and test files anyway. The main difference is that everything lives under the source root, including the WebContent directory. I’m not sure what the “preferred” process is for loading a Maven project into Eclipse, probably using the Maven

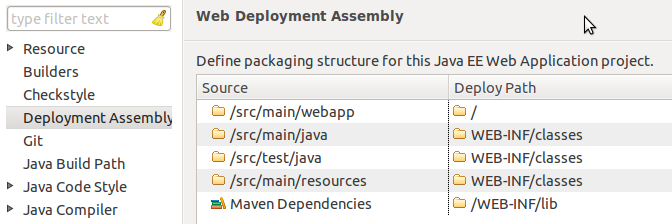

With the addition of the Eclipse “Deployment Assembly” options dialog, it is extremely easy to manually configure your project. You need the Dynamic Web Module project facet for the “Deployment Assembly” option to be visible, but that should be a fairly simple property change as well. At this point, you should have a fully functional, locally deployable web application.

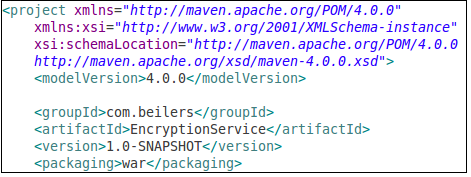

Now we can ignore Eclipse and focus on building our WAR file using Maven. Even with the minimalist POM shown to the left (plus your dependencies section), it is possible to compile the code and create a basic WAR file. Use `mvn compile` to ensure that your code compiles. Running the Maven package phase (`mvn package`) compiles the source code, compiles and executes the unit tests, and builds the WAR file. All of this functionality, without writing one line of build-script XML!
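For readers who cannot see the screenshot, a minimal WAR-packaged POM along those lines might look like the sketch below. This is an illustration, not the author's actual POM: the `groupId`, `artifactId`, and `version` values are hypothetical placeholders, and the Java level in `<properties>` is an assumption you should match to your JDK. With no plug-in configuration, Maven relies on its default layout (`src/main/java`, `src/test/java`, and `src/main/webapp` for the web content).

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Minimal sketch of a WAR-packaged POM; coordinates are hypothetical placeholders -->
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.example</groupId>
  <artifactId>sample-webapp</artifactId>
  <version>1.0-SNAPSHOT</version>
  <!-- "war" packaging binds the WAR plug-in to the package phase -->
  <packaging>war</packaging>

  <properties>
    <!-- Assumed Java level; set this to match your JDK -->
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  </properties>

  <dependencies>
    <!-- your dependencies section goes here -->
  </dependencies>
</project>
```

Running `mvn package` against this POM should produce `target/sample-webapp-1.0-SNAPSHOT.war`. Older versions of the WAR plug-in fail the build when `src/main/webapp/WEB-INF/web.xml` is missing; that behavior is controlled by the plug-in's `failOnMissingWebXml` parameter.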

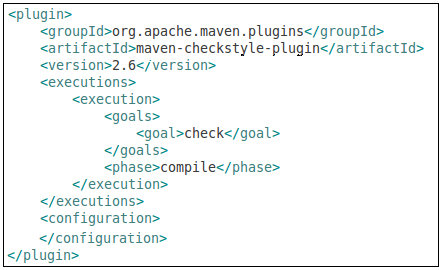

One of the more time-consuming parts of an Ant-based build is integrating all of the “extras” typically associated with a project and making them available to the continuous integration server. The extras include unit test execution, code coverage metrics, and quality verification tools such as Checkstyle, PMD, and FindBugs. These tools are all typically easy to set up, but every project implements them slightly differently and never puts the results in a standard place! The general process for adding new behavior (tools) to a build appears to be the same for most tools. You simply add the plug-in to the POM and configure it to fire the appropriate goals at the appropriate time in the Maven lifecycle. Ant does not have this lifecycle concept, but it seems like a very elegant way to add behavior to the build. In the following example, I added the Checkstyle tool to the POM. The `<executions>` section controls what the plug-in executes and when. In this example, the check goal will be executed during the compile phase of the build process. Simply running the compile phase (`mvn compile`) will invoke Checkstyle as well. This seems like a very clean integration technique, one that does not cause refactoring ripples.
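As a hedged sketch of the kind of configuration described above (the actual POM is in the screenshot), the following `maven-checkstyle-plugin` entry binds the `check` goal to the `compile` phase. The plug-in version and the execution `id` are assumptions; check for the current release. Without a `configLocation`, the plug-in falls back to its bundled Sun conventions ruleset, so you will probably want to point that parameter at your own rules file.

```xml
<!-- Sketch: this <build> section goes inside <project>; version and id are assumptions -->
<build>
  <plugins>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-checkstyle-plugin</artifactId>
      <version>3.1.2</version>
      <executions>
        <execution>
          <id>checkstyle-at-compile</id>
          <!-- "when": the lifecycle phase this execution is bound to -->
          <phase>compile</phase>
          <goals>
            <!-- "what": the plug-in goal to run in that phase -->
            <goal>check</goal>
          </goals>
        </execution>
      </executions>
    </plugin>
  </plugins>
</build>
```

Because the execution is bound to a phase, `mvn compile`, `mvn package`, or any later phase will run the check. Whether violations break the build is governed by the goal's `failOnViolation` parameter.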

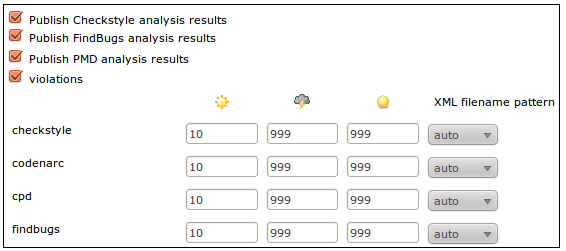

Most development teams also want their project monitored by a Continuous Integration (CI) process. Modern CI tools such as Hudson/Jenkins provide excellent dashboards for reporting a variety of quality metrics. As I previously stated, it is rather time-consuming to develop and test the Ant XML required to generate and publish these metrics; combine that with configuring each CI server job to capture these metrics, and you have added a fair amount of overhead.

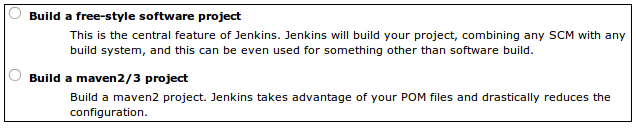

I knew there was support for Maven-based projects within Hudson/Jenkins, but never took the time to understand why it would be beneficial. The main benefit is right there in the description, had I bothered to read it! Configuring a Maven-based job is little more than clicking a few check boxes. There is no need to configure each metric in Jenkins; using the information provided by Maven, it is basically automatic. This is one interesting aspect of the Hudson-Jenkins fork. Sonatype, the creator of the Nexus Maven Repository Manager and the Eclipse Maven plug-in, has chosen the Hudson side of the battle. I wonder what this means for Maven support on the Jenkins side. Obviously, it will not go away, but it might end up being a Hudson advantage in the long run. I still believe that the Jenkins community will quickly outpace the Hudson community.

If you have ever looked at my blog, you know that I’m a huge advocate of Continuous Integration and have been pushing Hudson for several years now. The end of Sun unfortunately had a significant impact on many open-source projects, including Hudson. From a user perspective, Hudson continued to work pretty smoothly until last December. I don’t think the quality of Hudson changed, but the quality of the releases seemed to. The Hudson team had been extremely consistent with their weekly releases; the release notes were always updated, the download links always worked, life was good. As 2010 came to an end, something seemed to change: links pointed to the wrong versions, releases seemed inconsistent, and release notes were out of date or lagged by days. The most obvious indicator was that the community was very quiet… something was happening behind the scenes.

I thought it would be a really good idea to share interesting interview questions, especially the ones that caught me off guard!

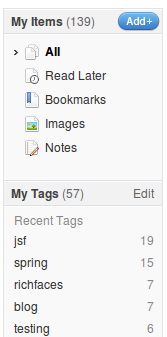

After a lot of Googling for Delicious replacements, I landed on

Everyone keeps asking me: what happened to your blog? Why did you stop posting? Unfortunately, it is a very complicated answer… I really don’t want to go into the details. Chances are, you have been in the same boat as me: placed on a high-profile, transformational project that needed to be done by a specific, non-movable date. It was a rather large project when you consider how it was staffed: project managers, agile coaches, testing teams, analysis teams, business liaisons, business consultants, architects, and a few developers. Combine this sizable staff with a new business vision, introduce some new technology requirements (ESB, BPM, Rules), and I think you will get a pretty clear picture of why I stopped blogging! This was a unique opportunity to finally “do it right”; we were able to complete the system last November/December and successfully migrate to production. It was a very stressful period for everyone on the team, spanning many months and including canceled vacations, working weekends, and extremely long days. This is not really a rant; we all have to go through these experiences at different times in our careers. This is really more about what you can learn and take away from the experience. Fortunately, I was able to be a student of the process; I observed and learned many valuable lessons, and many of my fundamental beliefs were reinforced. Unfortunately, I just did not have the energy to share those thoughts and gave myself a break from blogging. There are several topics that I do hope to go back and revisit, so you will have to keep on reading!

What’s next?